Sentiment: A Lab Notebook

1.

Positive, Negative, Neutral, Mixed

Amazon (NASDAQ: AMZN) sells many things, including books, clothes, spices, paint, furniture, weapons, stationery, blockchain services, customer relations management tools, sporting goods, and the ability to discern how you are feeling. Using the Amazon Comprehend service, any programmer can with a few lines of code send a piece of text (defined as a series of letters, not unlike these) to Amazon's cloud computers. Amazon Comprehend's machine learning models are used to perform “sentiment analysis”—that is, to determine the probability that the series of letters in question conveys or evinces one of four emotional valences: positive, negative, neutral, or mixed. For instance, sending Comprehend the sentence “I have wasted my life.” returns the following result:

'SentimentScore': {

'Positive': 0.08573993295431137,

'Negative': 0.8080717325210571,

'Neutral': 0.08350805938243866,

'Mixed': 0.022680217400193214,

}

One way of interpreting these numbers is that this sentence has an 81% chance of being negative, a 9% chance of being positive, an 8% chance of being neutral, and a 2% chance of being mixed. These numbers are similar to those produced by introspective human intelligence (a “ground truth”).
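The request itself might look something like the sketch below. It assumes the `boto3` library and its `detect_sentiment` call, along with configured AWS credentials; the helper name `sentiment_percentages` and the `FakeComprehend` stand-in are hypothetical, and the stand-in merely replays the scores quoted above so that the example runs without an account.

```python
def sentiment_percentages(client, text, language_code="en"):
    """Send text to a Comprehend-style client and round each score to a whole percent."""
    response = client.detect_sentiment(Text=text, LanguageCode=language_code)
    scores = response["SentimentScore"]
    return {label: round(score * 100) for label, score in scores.items()}


class FakeComprehend:
    """Hypothetical stand-in that replays the scores quoted above (no network call)."""

    def detect_sentiment(self, Text, LanguageCode):
        return {
            "Sentiment": "NEGATIVE",
            "SentimentScore": {
                "Positive": 0.08573993295431137,
                "Negative": 0.8080717325210571,
                "Neutral": 0.08350805938243866,
                "Mixed": 0.022680217400193214,
            },
        }


# With real credentials one would instead write (assumption: boto3 is installed):
#   import boto3
#   client = boto3.client("comprehend")
percentages = sentiment_percentages(FakeComprehend(), "I have wasted my life.")
print(percentages)
```

Rounding each score to a whole percent reproduces the reading given above: 81 negative, 9 positive, 8 neutral, 2 mixed.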

Likewise, sending Comprehend the sentence “Poetry changes nothing.” returns the following result:

'SentimentScore': {

'Positive': 0.052286338061094284,

'Negative': 0.5504230260848999,

'Neutral': 0.371753066778183,

'Mixed': 0.025537608191370964,

}

Comprehend believes that this sentence has a 55% chance of being negative but, compared to the previous sentence, is more likely to be neutral (a 37% chance). Once again, Comprehend is obviously correct.

Amazon's documentation explains the pricing model for Comprehend in great detail. The cost of determining the sentiment of a sequence of (up to 100) letters starts at $0.0001. Nowhere in this documentation does Amazon explain what is meant by positive, negative, neutral, or mixed.
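The arithmetic of that price can be sketched as follows, assuming only what is stated above: one billable unit per (up to) 100 letters, at the starting price of $0.0001 per unit. The function name `estimated_cost` is hypothetical, and the sketch ignores any minimum charges, volume discounts, or free allowances that Amazon's actual billing may apply.

```python
import math
from decimal import Decimal

PRICE_PER_UNIT = Decimal("0.0001")  # starting price quoted above, in dollars
UNIT_SIZE = 100  # letters per billable unit, as described above


def estimated_cost(text):
    """Rough cost of one sentiment request: units of 100 letters, rounded up."""
    units = math.ceil(len(text) / UNIT_SIZE)
    return units * PRICE_PER_UNIT


print(estimated_cost("I have wasted my life."))
print(estimated_cost("Poetry changes nothing. " * 20))
```

`Decimal` is used rather than floating point so that very small prices add up exactly.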

The use-cases of this technology are myriad.

Or, for example, you can tell whether a book's ending is generally positive or generally negative or generally neutral or generally mixed before deciding to read it.

Or, for example, you can automatically identify social media users whose posts about a certain political figure are negative. And you can send them advertisements or arrest them.

Or, for example, you can monitor your own social media feed or diary entries to determine whether you have become more depressed of late, or whether this depression changes with the seasons or correlates with other factors such as your diet.

Or, for example, you can monitor the social media feed of your employer to discern when their emotions are more positive than usual; this is a good time to ask for a raise or to explain a costly mistake.

Or, for example, you can just read the comments of your blog and determine if your readers liked it.

Or, for example, you can host a blog in one language (English) whose readers exclusively post comments in a language that you do not understand (German) and you can determine if your readers enjoyed or despised a post without understanding anything that they have written about it.

Or, for example, since comparing oneself to one's friends on social media can lead to envy and diminished self-esteem, you can filter your social media feed to include only those of your friends' posts that suggest that they are actually failing at life.

Machine Learning

You have been hired to perform sentiment analysis. When you arrive at your desk and jiggle the mouse, a web browser opens up and begins to flash a series of sentences, one by one. The firm that has hired you does not tell you that these sentences have been collected from various books, websites, blogs, prayers, and court transcripts. These sentences are in a language you don't read—German—but each is given a label: positive, negative, neutral, or mixed. You are unfazed by the fact that you do not understand them; you were hired not for your linguistic acumen but for your precise ability to notice the obscure relationships between phenomena. In the interview, you accurately determined that your brother tends to get the hiccups three to four days after consuming cinnamon and that the price of natural gas tends to rise when the movie atop the global box office is a sequel.

As the screen flashes these sentences, one by one, you compile an intricate memory of the relationships between sequences of words and the labels. In sentences labeled positive you often see the two-word sequence “Ich liebe.” In sentences labeled negative you often see the two-word sequence “Ich hasse.” Other correlations are more subtle. Perhaps sentences labeled positive are slightly more likely to contain “der Tag” and those labeled negative are more likely to contain the phrase “die Verletzung.” Perhaps sentences labeled neutral contain the word “obwohl” more frequently or are often just a bit longer than other sentences.

One day you come in and something has changed: the German sentences no longer have labels. Your boss informs you that your training is over and that now you must label each sentence as positive, negative, neutral, or mixed. Approximately 91% of the time, you are correct.
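Your method, reduced to its crudest form, might be sketched like this. It is an illustration and not Amazon's algorithm: the function names, the length threshold, and the four German training sentences are all invented for the example. You remember which words, two-word sequences, and sentence lengths appeared with which labels, and later you let those memories vote.

```python
from collections import Counter, defaultdict


def features(sentence):
    """Single words, two-word sequences, and a crude length bucket."""
    words = sentence.lower().split()
    feats = set(words)
    feats.update(" ".join(pair) for pair in zip(words, words[1:]))
    feats.add("<long>" if len(words) > 6 else "<short>")  # hypothetical threshold
    return feats


def train(labeled_sentences):
    """Count how often each feature appears alongside each label."""
    counts = defaultdict(Counter)
    for sentence, label in labeled_sentences:
        for feat in features(sentence):
            counts[feat][label] += 1
    return counts


def predict(counts, sentence, default="neutral"):
    """Let every remembered feature vote for the labels it was seen with."""
    votes = Counter()
    for feat in features(sentence):
        votes.update(counts.get(feat, {}))
    return votes.most_common(1)[0][0] if votes else default


# Invented training sentences, for illustration only.
training = [
    ("Ich liebe den Tag", "positive"),
    ("Ich liebe dich", "positive"),
    ("Ich hasse die Verletzung", "negative"),
    ("Ich hasse den Regen", "negative"),
]
memory = train(training)
print(predict(memory, "Ich liebe den Regen"))
print(predict(memory, "Ich hasse dich"))
```

Nothing in this procedure requires knowing what "liebe" or "hasse" means; the labels do all the understanding.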

When you lose your job because you have been replaced by Amazon Comprehend, which does almost exactly the same thing but much faster, you are ashamed to tell your brother. Recently you have spent a lot of time watching Netflix, muted and with the German subtitles activated. You prefer those films that go HAPPY, SAD, HAPPY to other structures, such as SAD, HAPPY, SAD and SAD, HAPPY, SAD, HAPPY. But it is better when they are not so happy at the end, and not for any great length of time (“Man in Hole” Type 1).

Adversarial Encounters

Typically one tests a machine learning model by calculating how well its predictions fare on some amount of data that it has not seen (“hold-out data”). One may also generate “adversarial examples,” attempts to trick the model, revealing its illogics, neuroses, and fissures.
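A hold-out test can be sketched in a few lines. The names `holdout_split` and `accuracy`, the 20% fraction, and the fixed seed are assumptions made for the illustration; `predict` stands for any function that maps a text to a label.

```python
import random


def holdout_split(examples, holdout_fraction=0.2, seed=0):
    """Shuffle labeled examples and set a fraction aside, unseen during training."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - holdout_fraction))
    return shuffled[:cut], shuffled[cut:]


def accuracy(predict, held_out):
    """Fraction of held-out examples whose predicted label matches the true label."""
    if not held_out:
        raise ValueError("no held-out examples to score")
    correct = sum(1 for text, label in held_out if predict(text) == label)
    return correct / len(held_out)


# Ten invented examples: eight to learn from, two to be tested on.
examples = [(f"sentence {i}", "positive" if i % 2 else "negative") for i in range(10)]
train_set, held_out = holdout_split(examples)
print(len(train_set), len(held_out))
```

A figure like "correct 91% of the time" is this kind of number: the share of unseen sentences labeled correctly.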

The Task: Compose a text (a series of letters) that Amazon Comprehend thinks is positive but is actually negative, or vice versa.

The Result: Intuitive experimentation revealed that one way to trick Amazon's machine learning model is by assuming a religious posture, specifically a yearning for sainthood, a state in which certain forms of otherwise-negative experience (to be broken, impoverished, sacrificed, pierced, afflicted) shall ultimately be redeemed (i.e. made positive). For instance, the deeply positive sentiment of the phrase:

“hungry to be occluded, poor in spirit, gloriously torn by wolves”

is incorrectly comprehended by Amazon Comprehend:

'SentimentScore': {

'Positive': 0.0017081353580579162,

'Negative': 0.6465626955032349,

'Neutral': 0.3145301043987274,

'Mixed': 0.03719902038574219,

}

2.

An Experiment/A Form

One need not use Amazon Comprehend to determine whether one is sad or happy or neutral or some combination of these feelings. It is increasingly easy to train machine learning models on your own computer, expending only a modest quantity of electricity.

The Apparatus: Text posts were downloaded en masse from Tumblr. Some of these were posts that users of this site had tagged as #inspiring or #inspiration (positive). Others were tagged #depressing, #depressed, #sad, or #sadness (negative).

The Python programming language, which comes pre-installed on Mac/Linux computers and is also easy to install on Windows computers, is increasingly popular for machine learning tasks. Using Python's Natural Language Toolkit, a machine learning model was trained to distinguish between the positive and negative sentences.
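The preparation of such data might be sketched as follows. The tag lists come from the description above; everything else (the function names, the bag-of-words features, the rule of discarding posts with conflicting tags, the sample posts) is an assumption. The resulting list has the shape that NLTK classifiers accept, for instance `nltk.NaiveBayesClassifier.train(training_set)`, though which NLTK classifier was actually used is not stated here.

```python
POSITIVE_TAGS = {"inspiring", "inspiration"}
NEGATIVE_TAGS = {"depressing", "depressed", "sad", "sadness"}


def label_for(tags):
    """Return 'positive' or 'negative', or None when tags are absent or conflicting."""
    cleaned = {tag.lstrip("#").lower() for tag in tags}
    is_positive = bool(cleaned & POSITIVE_TAGS)
    is_negative = bool(cleaned & NEGATIVE_TAGS)
    if is_positive == is_negative:
        return None
    return "positive" if is_positive else "negative"


def word_features(text):
    """Bag-of-words feature dictionary: each lowercased word maps to True."""
    return {word: True for word in text.lower().split()}


def build_training_set(posts):
    """Turn (text, tags) pairs into (features, label) pairs, skipping unlabeled posts."""
    training = []
    for text, tags in posts:
        label = label_for(tags)
        if label is not None:
            training.append((word_features(text), label))
    return training


# Invented posts, for illustration only.
posts = [
    ("You can do it", ["#inspiring"]),
    ("Nothing matters", ["#sad", "#depressed"]),
    ("Both at once", ["#inspiration", "#sadness"]),
    ("Untagged", []),
]
training_set = build_training_set(posts)
print(training_set)
```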

This model is of the same genus as the one used by Amazon Comprehend, but not the same species. One way of explaining the difference is that this model is worse; fed less data, and with a much simpler algorithm for recognizing patterns, it does not do as good a job of predicting whether a sentence is positive or negative (and it knows no neutral or mixed). In this respect, there is no competing with Amazon, which has near-limitless computational resources (in terms of data, electricity, and engineers).

Another way of explaining the difference is that this model is better; its understanding of what makes something positive vs. negative is not totally random (there is some truth in it), but it differs more generously from a “common sense” or “general” understanding of what makes a sentence seem positive or negative. Let us call this mysterious tendentiousness, this particular falsehood, a personality.

“For example, you can use sentiment analysis to determine the sentiments of comments on a blog posting to determine if your readers liked the post.” Or, for example, you can perform bespoke PSYOPs. Or, for example, you can use the model to change the way you write poetry, specifically lyric poetry, which has something to do with emotions, with the precision with which emotions are recalled, and with the accuracy, and with their placement into a time-series.

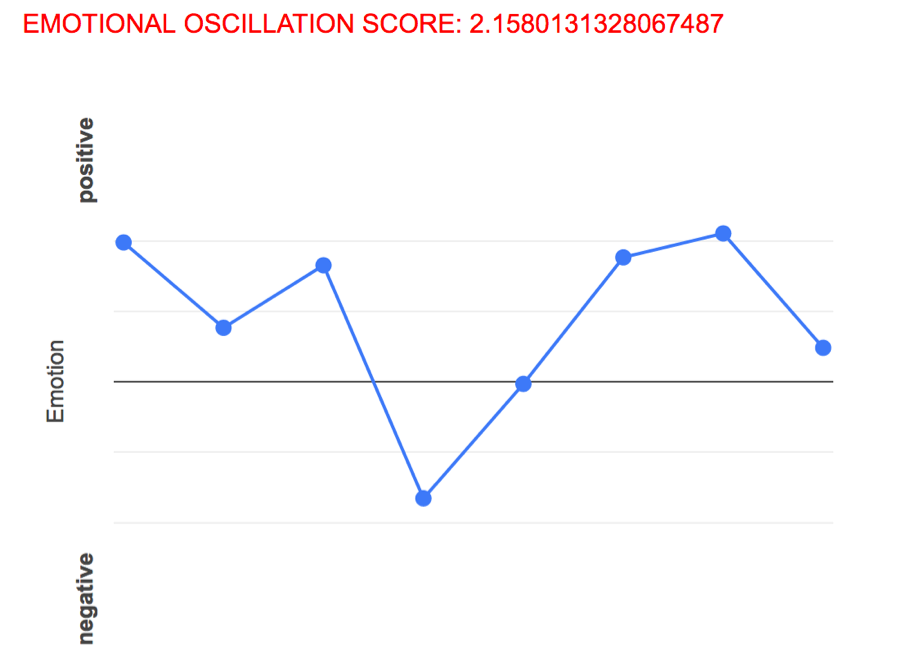

The Method: A new measurement was defined: a poem's EMOTIONAL OSCILLATION SCORE is equal to the sum of the absolute values of the differences between the sentiment scores of all pairs of adjacent sentences. A game was played (and others too can play this game): maximize the EMOTIONAL OSCILLATION SCORE, writing a very positive sentence then a very negative one, + then -, up then down, an alternating current of feeling or feeling-like words. The form itself, like any poetic form, may have metaphorical resonances (with the image of a body thrusting itself up and down to perform barbell squats, with the jarring emotional juxtapositions of a Twitter feed) but is, at last count, arbitrary.
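The measurement can be written out as a short function. The sketch assumes that each sentence has already been reduced to a single number (here, hypothetically, the model's probability that the sentence is positive); the sample scores are invented.

```python
def emotional_oscillation_score(sentence_scores):
    """Sum of absolute differences between the scores of adjacent sentences."""
    return sum(
        abs(later - earlier)
        for earlier, later in zip(sentence_scores, sentence_scores[1:])
    )


# Invented scores: one poem that swings, one that does not.
swinging = [0.9, 0.1, 0.8, 0.2]
flat = [0.5, 0.5, 0.5, 0.5]
print(round(emotional_oscillation_score(swinging), 2))
print(round(emotional_oscillation_score(flat), 2))
```

A poem of a single sentence has no adjacent pairs and so scores zero; the score grows with both the size of each swing and the number of sentences.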

The Result: A poem titled “Window Scene (Evolved via Algorithmic Guidance to Maximize Emotional Oscillation).”

3.

The Poem

Window Scene (Evolved via Algorithmic Guidance to Maximize Emotional Oscillation)

i. A Nissan with bikes on the back. The family wearing orange shorts together, how frightening. Eight trees of similar humorlessness: the foreground, the middle distance, the background. The girl in the red sweater jumps over the fence, gently bruising. And behind, the dense gray brown thicket of January and this particular latitude. If you could you would drive all the cars at once, the way that other people play the piano or maintain many friendships via text messaging. Or like a tree that looks like it contains blackbirds but is really blackbirds all the way down. Blackbirds are really purple.

***

ii. A Nissan with bikes on the back. The family devouring orange blood together, how frightening. Eight loves of similar minds: the foreground, the middle distance, the background. The girl in the red sweater jumps over the fence, bruising and breaking. And behind, the dense gray brown thicket of tomorrow and this particular memory. If you could you would drive all the cars at once, the way that other people play the piano or maintain many nightmares via text messaging. Or like a tree that looks like it contains blackbirds but is really blackbirds all the way down. Bones are really purple.

***

iii. A dog with bikes on its back. My family devouring orange blood together, how frightening. Eight loves of similar minds: the foreground, the middle distance, the soul. The girl in the red sweater jumps over the fence, bruising and breaking. And behind, the dense gray brown thicket of tomorrow and this particular memory. If you could you would drive all the cars at once, the way that other people play the piano or maintain many nightmares via text messaging. Or like a tree that looks like it contains blackbirds but is really blackbirds all the way down. Bones are really purple.

***

iv. A dog with bikes on its back. My family devouring orange blood together, how frightening. Eight loves of similar minds: the foreground, the middle distance, the soul. The girl in the red sweater jumps over the fence, bruising and breaking. And behind, the dense gray brown thicket of tomorrow and this particular memory. If you could you would kill all the cars at once, the memory that the priests scream, and save many nightmares via text messages. Or like a tree that looks like it contains blackbirds but is really blackbirds all the way down. Bones are really purple.

***

v. A dog with bikes on its back. My family devouring orange blood together, how frightening. Eight loves of similar minds: the foreground, the middle distance, the soul. The girl in the red sweater jumps over the fence, bruising and breaking. And behind, the dense gray brown thicket of tomorrow and this particular memory. If you could you would kill all the cars at once, the memory that the priests scream, and save many nightmares via text messages. Or like a tree that looks like it contains blackbirds but is really blackbirds all the way down. My bones (for once in my life) are really purple like I want yours to be.

Conclusion: We hypothesized that radical swings in affective posture would make the writer more emotionally flexible. Likewise, we hypothesized that attempting to discern the emotional valences of a machine learning model derived from achingly sensitive Tumblr posts would make the writer more empathetic. Unfortunately, no conclusions could be drawn from a single poem.